
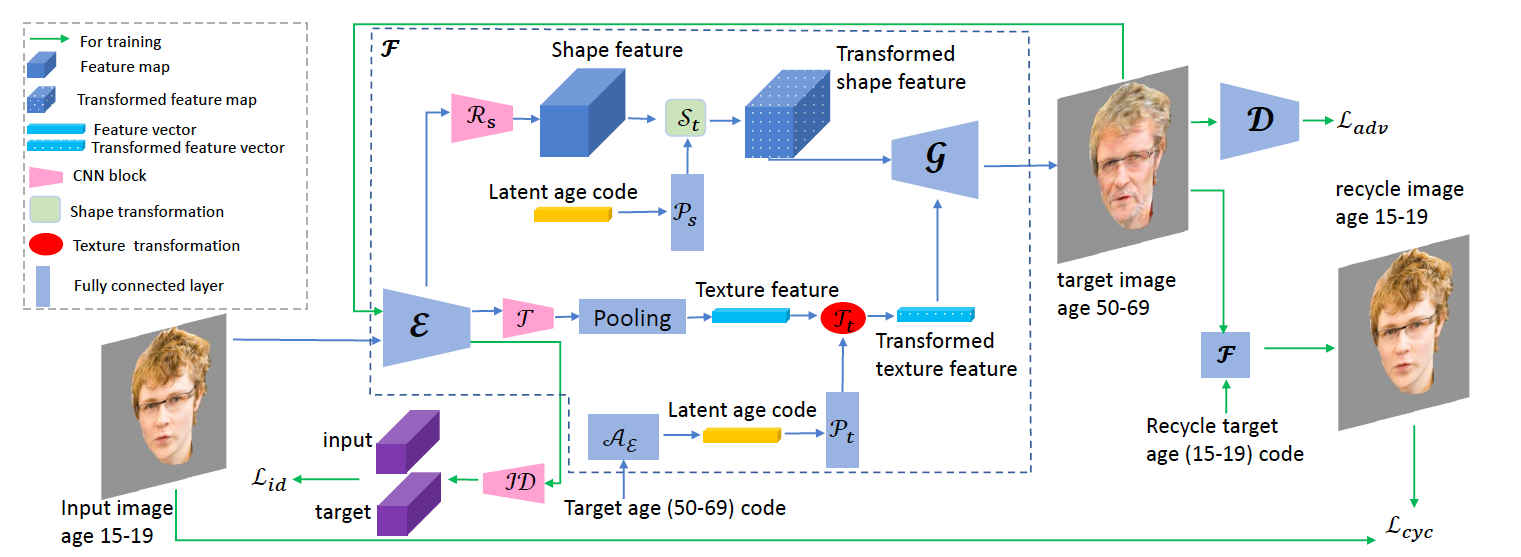

This page uses the AI technique DLFS (Disentangled Lifespan Face Synthesis) to simulate how a person's face changes with age.

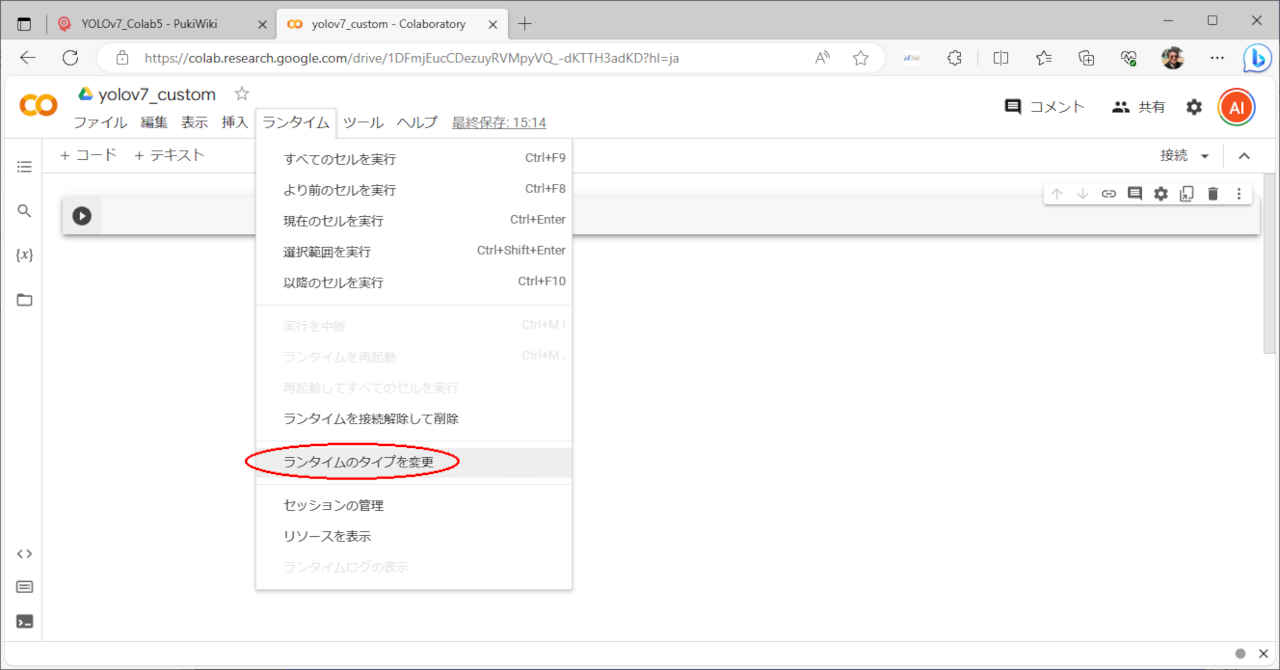

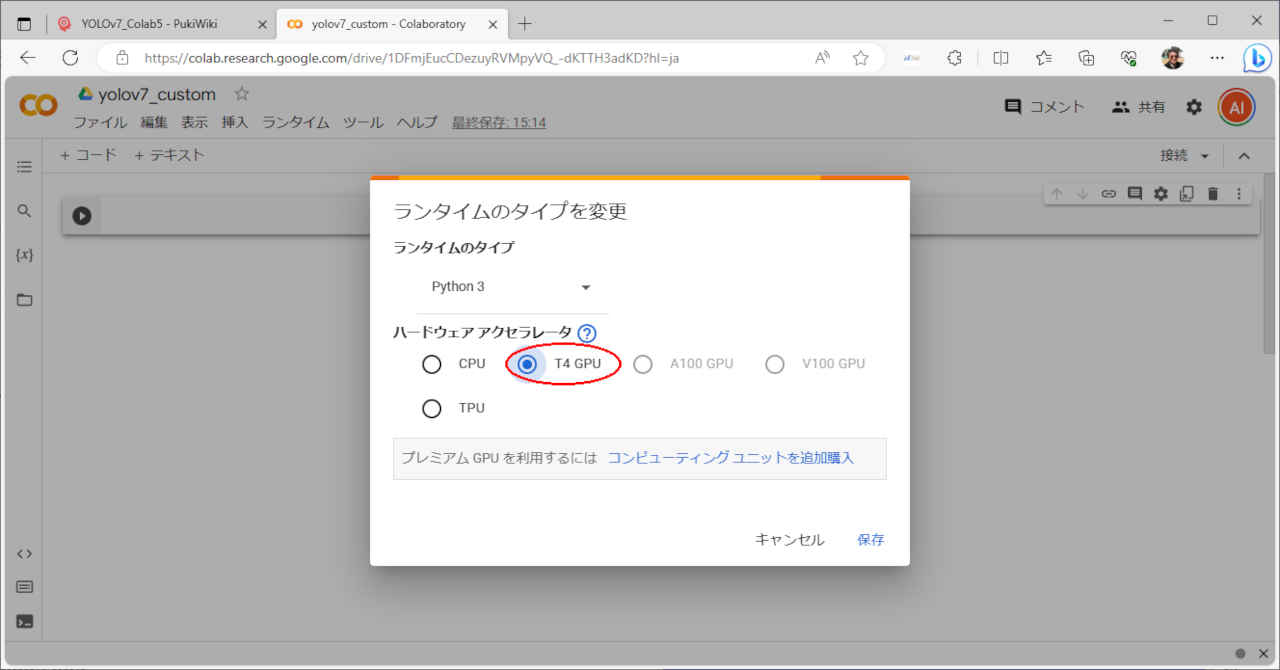

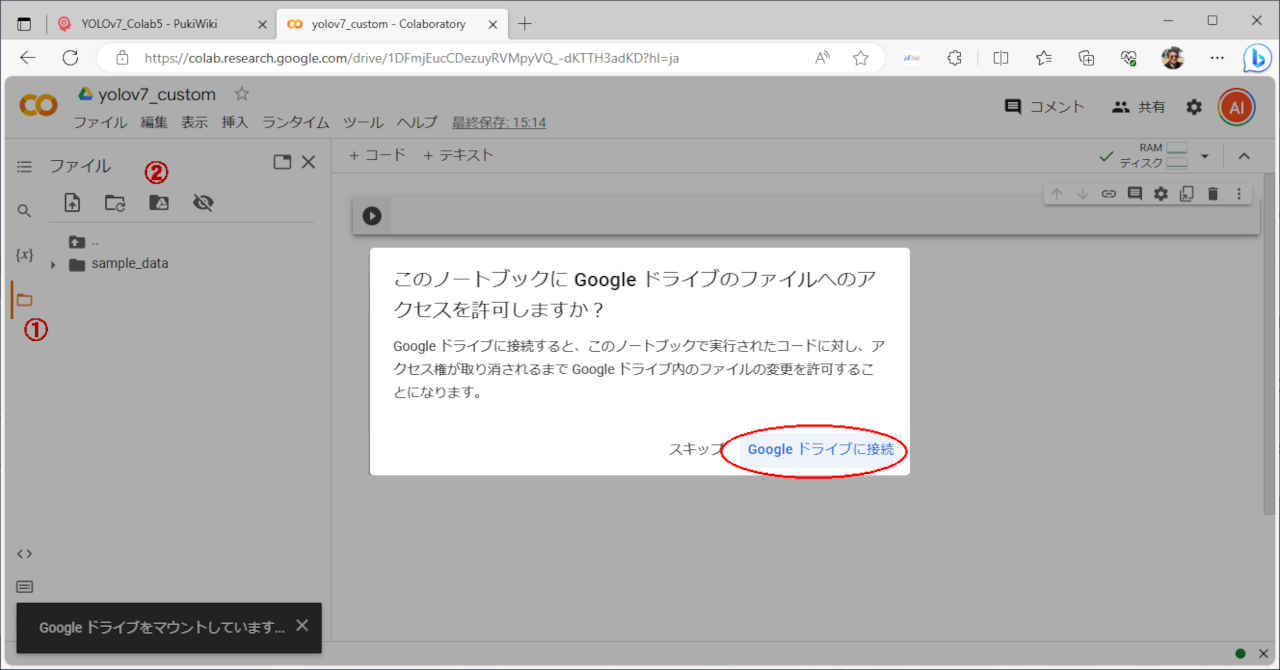

### Setup

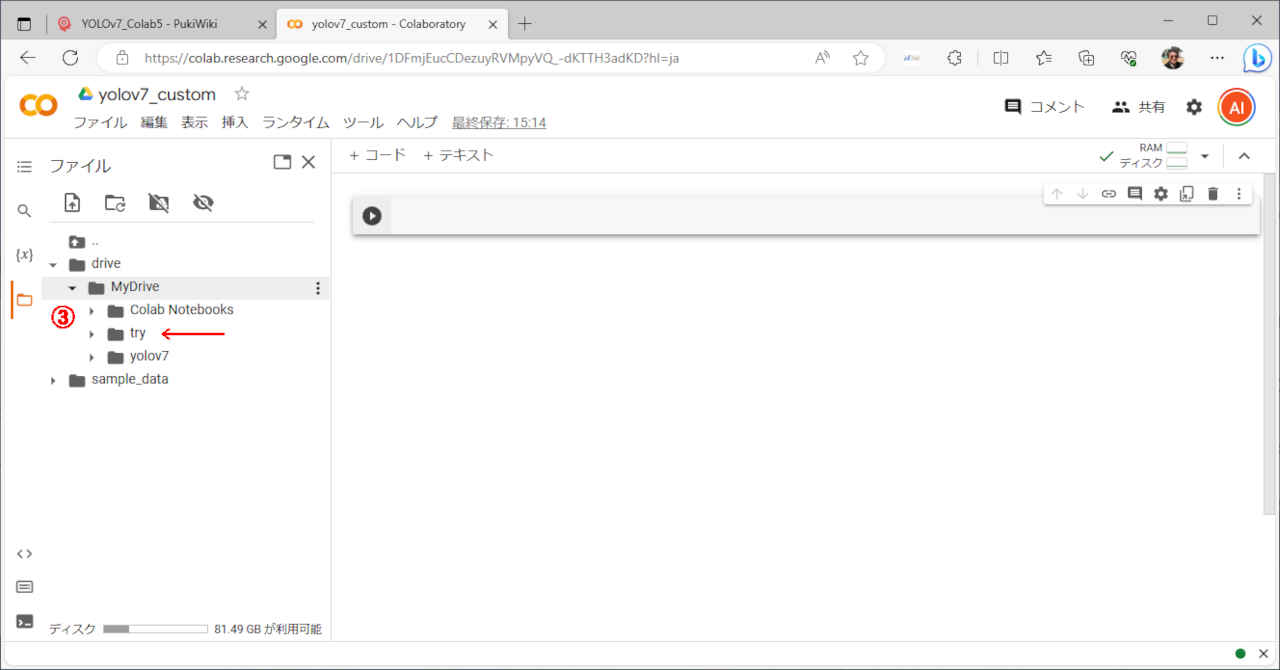

# Change the current directory to "MyDrive/try"

%cd /content/drive/MyDrive/try

# Clone the code from GitHub

!git clone https://github.com/SenHe/DLFS.git

%cd DLFS/

# Install the required libraries

!pip3 install -r requirements.txt

# Download the auxiliary models

!python download_models.py

# Download the pretrained models

import gdown

!mkdir checkpoints

%cd checkpoints

gdown.download('https://drive.google.com/u/0/uc?id=1pB4mufFtzbJSxxv_2iFrBPD3vp_Ef-n3&export=download', 'males_model.zip', quiet=False)

!unzip males_model.zip

gdown.download('https://drive.google.com/u/0/uc?id=1z0s_j3Khs7-352bMvz8RSnrR53vvdbiI&export=download', 'females_model.zip', quiet=False)

!unzip females_model.zip

%cd ..

# Download the sample images

gdown.download('https://drive.google.com/uc?id=1ruwDizjnzd3scR1QvpXGWLXywY8_W0yj', './images.zip', quiet=False)

!unzip images.zip
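The `unzip` output for `males_model.zip` further down also inflates macOS metadata (`.DS_Store` and a `__MACOSX/` folder). The following is a minimal sketch, not part of the original notebook, of extracting an archive with Python's standard `zipfile` module while skipping those entries. The helper name `extract_clean` is hypothetical.

```python
import zipfile
from pathlib import Path


def extract_clean(zip_path, dest_dir):
    """Extract a zip archive, skipping macOS metadata entries.

    Returns the list of member names that were extracted.
    """
    extracted = []
    with zipfile.ZipFile(zip_path) as zf:
        for name in zf.namelist():
            parts = Path(name).parts
            # Skip Finder metadata: __MACOSX/ folders and .DS_Store files
            if "__MACOSX" in parts or Path(name).name == ".DS_Store":
                continue
            zf.extract(name, dest_dir)
            extracted.append(name)
    return extracted
```

In the notebook this could replace `!unzip males_model.zip`, for example `extract_clean('males_model.zip', '.')` run inside the `checkpoints` directory.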

/content/drive/MyDrive/try

Cloning into 'DLFS'...

remote: Enumerating objects: 174, done.

remote: Counting objects: 100% (117/117), done.

remote: Compressing objects: 100% (74/74), done.

remote: Total 174 (delta 50), reused 106 (delta 41), pack-reused 57

Receiving objects: 100% (174/174), 69.80 MiB | 15.74 MiB/s, done.

Resolving deltas: 100% (61/61), done.

Updating files: 100% (58/58), done.

/content/drive/MyDrive/try/DLFS

Requirement already satisfied: opencv-python in /usr/local/lib/python3.10/dist-packages (from -r requirements.txt (line 1)) (4.8.0.76)

Collecting visdom (from -r requirements.txt (line 2))

Downloading visdom-0.2.4.tar.gz (1.4 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 1.4/1.4 MB 10.8 MB/s eta 0:00:00

Preparing metadata (setup.py) ... done

Collecting dominate (from -r requirements.txt (line 3))

Downloading dominate-2.8.0-py2.py3-none-any.whl (29 kB)

Requirement already satisfied: numpy in /usr/local/lib/python3.10/dist-packages (from -r requirements.txt (line 4)) (1.23.5)

Requirement already satisfied: scipy in /usr/local/lib/python3.10/dist-packages (from -r requirements.txt (line 5)) (1.11.3)

Requirement already satisfied: pillow in /usr/local/lib/python3.10/dist-packages (from -r requirements.txt (line 6)) (9.4.0)

Collecting unidecode (from -r requirements.txt (line 7))

Downloading Unidecode-1.3.7-py3-none-any.whl (235 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 235.5/235.5 kB 16.2 MB/s eta 0:00:00

Requirement already satisfied: requests in /usr/local/lib/python3.10/dist-packages (from -r requirements.txt (line 8)) (2.31.0)

Requirement already satisfied: tqdm in /usr/local/lib/python3.10/dist-packages (from -r requirements.txt (line 9)) (4.66.1)

Requirement already satisfied: dlib in /usr/local/lib/python3.10/dist-packages (from -r requirements.txt (line 10)) (19.24.2)

Requirement already satisfied: tornado in /usr/local/lib/python3.10/dist-packages (from visdom->-r requirements.txt (line 2)) (6.3.2)

Requirement already satisfied: six in /usr/local/lib/python3.10/dist-packages (from visdom->-r requirements.txt (line 2)) (1.16.0)

Collecting jsonpatch (from visdom->-r requirements.txt (line 2))

Downloading jsonpatch-1.33-py2.py3-none-any.whl (12 kB)

Requirement already satisfied: websocket-client in /usr/local/lib/python3.10/dist-packages (from visdom->-r requirements.txt (line 2)) (1.6.4)

Requirement already satisfied: networkx in /usr/local/lib/python3.10/dist-packages (from visdom->-r requirements.txt (line 2)) (3.2)

Requirement already satisfied: charset-normalizer<4,>=2 in /usr/local/lib/python3.10/dist-packages (from requests->-r requirements.txt (line 8)) (3.3.1)

Requirement already satisfied: idna<4,>=2.5 in /usr/local/lib/python3.10/dist-packages (from requests->-r requirements.txt (line 8)) (3.4)

Requirement already satisfied: urllib3<3,>=1.21.1 in /usr/local/lib/python3.10/dist-packages (from requests->-r requirements.txt (line 8)) (2.0.7)

Requirement already satisfied: certifi>=2017.4.17 in /usr/local/lib/python3.10/dist-packages (from requests->-r requirements.txt (line 8)) (2023.7.22)

Collecting jsonpointer>=1.9 (from jsonpatch->visdom->-r requirements.txt (line 2))

Downloading jsonpointer-2.4-py2.py3-none-any.whl (7.8 kB)

Building wheels for collected packages: visdom

Building wheel for visdom (setup.py) ... done

Created wheel for visdom: filename=visdom-0.2.4-py3-none-any.whl size=1408194 sha256=b2ee95966998bde11d82ed0c3d2065af0e10b922a9b86436a0fbe9b7ff8a4138

Stored in directory: /root/.cache/pip/wheels/42/29/49/5bed207bac4578e4d2c0c5fc0226bfd33a7e2953ea56356855

Successfully built visdom

Installing collected packages: unidecode, jsonpointer, dominate, jsonpatch, visdom

Successfully installed dominate-2.8.0 jsonpatch-1.33 jsonpointer-2.4 unidecode-1.3.7 visdom-0.2.4

Downloading face landmarks shape predictor

100% 99.7M/99.7M [00:09<00:00, 10.2MB/s]

Done!

Downloading DeeplabV3 backbone Resnet Model parameters

0% 2.26k/178M [00:00<4:30:43, 11.0kB/s]Google Drive download failed.

Trying do download from alternate server

100% 178M/178M [00:02<00:00, 78.4MB/s]

Done!

Downloading DeeplabV3 Model parameters

0% 2.26k/464M [00:00<12:54:31, 9.99kB/s]Google Drive download failed.

Trying do download from alternate server

100% 464M/464M [00:06<00:00, 75.6MB/s]

Done!

/content/drive/MyDrive/try/DLFS/checkpoints

Downloading...

From: https://drive.google.com/u/0/uc?id=1pB4mufFtzbJSxxv_2iFrBPD3vp_Ef-n3&export=download

To: /content/drive/MyDrive/try/DLFS/checkpoints/males_model.zip

100%|██████████| 204M/204M [00:05<00:00, 39.7MB/s]

Archive: males_model.zip

creating: males_model/

inflating: males_model/latest_net_G.pth

inflating: males_model/.DS_Store

inflating: __MACOSX/males_model/._.DS_Store

inflating: males_model/latest_net_g_running.pth

Downloading...

From: https://drive.google.com/u/0/uc?id=1z0s_j3Khs7-352bMvz8RSnrR53vvdbiI&export=download

To: /content/drive/MyDrive/try/DLFS/checkpoints/females_model.zip

100%|██████████| 204M/204M [00:08<00:00, 24.9MB/s]

Archive: females_model.zip

creating: females_model/

inflating: females_model/latest_net_G.pth

inflating: females_model/latest_net_g_running.pth

/content/drive/MyDrive/try/DLFS

Downloading...

From: https://drive.google.com/uc?id=1ruwDizjnzd3scR1QvpXGWLXywY8_W0yj

To: /content/drive/MyDrive/try/DLFS/images.zip

100%|██████████| 1.25M/1.25M [00:00<00:00, 114MB/s]

Archive: images.zip

inflating: images/01.jpg

inflating: images/02.jpg

inflating: images/03.jpg

inflating: images/04.jpg

inflating: images/05.jpg

inflating: images/06.jpg

inflating: images/07.jpg

inflating: images/08.jpg

inflating: images/09.jpg

inflating: images/10.jpg

inflating: images/11.jpg

inflating: images/12.jpg

inflating: images/13.jpg

inflating: images/14.jpg
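Before moving on, it can help to confirm that the setup produced the files the later steps rely on. The sketch below is an illustration only: the helper name `missing_files` is hypothetical, and the expected paths are taken from the `unzip` output above, so they would need adjusting if the archives change.

```python
from pathlib import Path

# Paths relative to the DLFS directory, as listed in the unzip output above
EXPECTED = [
    "checkpoints/males_model/latest_net_G.pth",
    "checkpoints/males_model/latest_net_g_running.pth",
    "checkpoints/females_model/latest_net_G.pth",
    "checkpoints/females_model/latest_net_g_running.pth",
    "images/01.jpg",
    "images/14.jpg",
]


def missing_files(root, expected=EXPECTED):
    """Return the expected relative paths that do not exist under root."""
    root = Path(root)
    return [rel for rel in expected if not (root / rel).is_file()]
```

For example, `missing_files('/content/drive/MyDrive/try/DLFS')` returns an empty list when every file is in place.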

My Drive
┗ try
  ┗ DLFS
    ┠ images
    ┃ ┠ f_tsuchiya_1.jpg
    ┃ ┠ izutsu_m2.jpg
    ┃ ┃ :
    ┃
    ┗ dlfs_test.py

cd /content/drive/MyDrive/try/DLFS

・Output

/content/drive/MyDrive/try/DLFS

!pip install unidecode dominate

Collecting unidecode

Downloading Unidecode-1.3.7-py3-none-any.whl (235 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 235.5/235.5 kB 4.5 MB/s eta 0:00:00

Collecting dominate

Downloading dominate-2.8.0-py2.py3-none-any.whl (29 kB)

Installing collected packages: unidecode, dominate

Successfully installed dominate-2.8.0 unidecode-1.3.7

!python dlfs_test.py

------------ Options -------------

activation: lrelu

batchSize: 1

checkpoints_dir: ./checkpoints

compare_to_trained_class: 1

compare_to_trained_outputs: False

conv_weight_norm: True

dataroot: ./datasets/males/

debug_mode: False

decoder_norm: pixel

deploy: False

display_id: 1

display_port: 8097

display_single_pane_ncols: 6

display_winsize: 256

encoder_type: distan

fineSize: 256

full_progression: False

gen_dim_per_style: 50

gpu_ids: [0]

how_many: 50

id_enc_norm: pixel

image_path_file: None

in_the_wild: False

input_nc: 3

interp_step: 0.5

isTrain: False

loadSize: 256

make_video: False

max_dataset_size: inf

nThreads: 4

n_adaptive_blocks: 4

n_downsample: 2

name: debug

ngf: 64

no_cond_noise: False

no_flip: False

no_moving_avg: False

normalize_mlp: True

ntest: inf

output_nc: 3

phase: test

random_seed: -1

resize_or_crop: resize_and_crop

results_dir: ./results/

serial_batches: False

sort_classes: True

sort_order: ['0-2', '3-6', '7-9', '15-19', '30-39', '50-69']

trained_class_jump: 1

traverse: False

use_modulated_conv: True

use_resblk_pixel_norm: False

verbose: False

which_epoch: latest

-------------- End ----------------
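The option dump above lists values such as `interp_step: 0.5`, `in_the_wild: False` and `name: debug`, while `main()` in `dlfs_test.py` assigns different values after parsing. This suggests the dump shows the parsed defaults rather than the values actually used. The sketch below illustrates that reading with plain Python; the helper names are hypothetical, and the two dictionaries copy only a subset of the options.

```python
from argparse import Namespace

# Subset of the values printed in the option dump
PRINTED_DEFAULTS = {
    "display_id": 1, "nThreads": 4, "batchSize": 1, "serial_batches": False,
    "no_flip": False, "in_the_wild": False, "traverse": False,
    "interp_step": 0.5, "name": "debug",
}

# Values assigned in main() of dlfs_test.py (name comes from --model)
SCRIPT_OVERRIDES = {
    "display_id": 0, "nThreads": 1, "batchSize": 1, "serial_batches": True,
    "no_flip": True, "in_the_wild": True, "traverse": True,
    "interp_step": 0.05, "name": "males_model",
}


def effective_options(defaults, overrides):
    """Merge overrides into defaults and return them as a Namespace."""
    unknown = set(overrides) - set(defaults)
    if unknown:
        raise KeyError(f"unknown options: {sorted(unknown)}")
    merged = dict(defaults)
    merged.update(overrides)
    return Namespace(**merged)


def changed_keys(defaults, overrides):
    """Names of options whose value differs from the printed default."""
    return sorted(k for k, v in overrides.items() if defaults.get(k) != v)


opt = effective_options(PRINTED_DEFAULTS, SCRIPT_OVERRIDES)
print(changed_keys(PRINTED_DEFAULTS, SCRIPT_OVERRIDES))
```

Under this reading, the run uses `interp_step = 0.05` and the selected model name, not the printed `0.5` and `debug`.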

AgingDataLoader

dataset [MulticlassUnalignedDataset] was created

Distan_Generator(

(id_encoder): Distan_Encoder_1(

(encoder1): Sequential(

(0): ReflectionPad2d((3, 3, 3, 3))

(1): EqualConv2d(

(conv): Conv2d(3, 64, kernel_size=(7, 7), stride=(1, 1))

)

(2): PixelNorm()

(3): ReLU(inplace=True)

(4): ReflectionPad2d((1, 1, 1, 1))

(5): EqualConv2d(

(conv): Conv2d(64, 128, kernel_size=(3, 3), stride=(2, 2))

)

(6): PixelNorm()

(7): ReLU(inplace=True)

(8): ReflectionPad2d((1, 1, 1, 1))

(9): EqualConv2d(

(conv): Conv2d(128, 256, kernel_size=(3, 3), stride=(2, 2))

)

(10): PixelNorm()

(11): ReLU(inplace=True)

)

(encoder2): Sequential(

(0): ResnetBlock(

(conv_block): Sequential(

(0): ReflectionPad2d((1, 1, 1, 1))

(1): EqualConv2d(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1))

)

(2): PixelNorm()

(3): ReLU(inplace=True)

(4): ReflectionPad2d((1, 1, 1, 1))

(5): EqualConv2d(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1))

)

(6): PixelNorm()

)

)

(1): ResnetBlock(

(conv_block): Sequential(

(0): ReflectionPad2d((1, 1, 1, 1))

(1): EqualConv2d(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1))

)

(2): PixelNorm()

(3): ReLU(inplace=True)

(4): ReflectionPad2d((1, 1, 1, 1))

(5): EqualConv2d(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1))

)

(6): PixelNorm()

)

)

(2): ResnetBlock(

(conv_block): Sequential(

(0): ReflectionPad2d((1, 1, 1, 1))

(1): EqualConv2d(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1))

)

(2): PixelNorm()

(3): ReLU(inplace=True)

(4): ReflectionPad2d((1, 1, 1, 1))

(5): EqualConv2d(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1))

)

(6): PixelNorm()

)

)

)

(id_layer): Sequential(

(0): ResnetBlock(

(conv_block): Sequential(

(0): ReflectionPad2d((1, 1, 1, 1))

(1): EqualConv2d(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1))

)

(2): PixelNorm()

(3): ReLU(inplace=True)

(4): ReflectionPad2d((1, 1, 1, 1))

(5): EqualConv2d(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1))

)

(6): PixelNorm()

)

)

(1): ReflectionPad2d((1, 1, 1, 1))

(2): EqualConv2d(

(conv): Conv2d(256, 512, kernel_size=(3, 3), stride=(2, 2))

)

(3): PixelNorm()

(4): ReLU(inplace=True)

)

(structure_layer): ResnetBlock(

(conv_block): Sequential(

(0): ReflectionPad2d((1, 1, 1, 1))

(1): EqualConv2d(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1))

)

(2): PixelNorm()

(3): ReLU(inplace=True)

(4): ReflectionPad2d((1, 1, 1, 1))

(5): EqualConv2d(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1))

)

(6): PixelNorm()

)

)

(text_layer): Sequential(

(0): ReflectionPad2d((1, 1, 1, 1))

(1): EqualConv2d(

(conv): Conv2d(256, 512, kernel_size=(3, 3), stride=(2, 2))

)

(2): PixelNorm()

(3): ReLU(inplace=True)

(4): ReflectionPad2d((1, 1, 1, 1))

(5): EqualConv2d(

(conv): Conv2d(512, 1024, kernel_size=(3, 3), stride=(2, 2))

)

(6): PixelNorm()

(7): ReLU(inplace=True)

(8): AdaptiveAvgPool2d(output_size=1)

(9): EqualConv2d(

(conv): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1))

)

)

)

(age_encoder): AgeEncoder(

(encoder): Sequential(

(0): ReflectionPad2d((3, 3, 3, 3))

(1): EqualConv2d(

(conv): Conv2d(3, 64, kernel_size=(7, 7), stride=(1, 1))

)

(2): LeakyReLU(negative_slope=0.2, inplace=True)

(3): ReflectionPad2d((1, 1, 1, 1))

(4): EqualConv2d(

(conv): Conv2d(64, 128, kernel_size=(3, 3), stride=(2, 2))

)

(5): LeakyReLU(negative_slope=0.2, inplace=True)

(6): ReflectionPad2d((1, 1, 1, 1))

(7): EqualConv2d(

(conv): Conv2d(128, 256, kernel_size=(3, 3), stride=(2, 2))

)

(8): LeakyReLU(negative_slope=0.2, inplace=True)

(9): ReflectionPad2d((1, 1, 1, 1))

(10): EqualConv2d(

(conv): Conv2d(256, 512, kernel_size=(3, 3), stride=(2, 2))

)

(11): LeakyReLU(negative_slope=0.2, inplace=True)

(12): ReflectionPad2d((1, 1, 1, 1))

(13): EqualConv2d(

(conv): Conv2d(512, 1024, kernel_size=(3, 3), stride=(2, 2))

)

(14): LeakyReLU(negative_slope=0.2, inplace=True)

(15): EqualConv2d(

(conv): Conv2d(1024, 300, kernel_size=(1, 1), stride=(1, 1))

)

)

)

(decoder): Distan_StyledDecoder(

(StyledConvBlock_0): StyledConvBlock(

(conv0): ModulatedConv2d(

(mlp_class_std): Sequential(

(0): EqualLinear(

(linear): Linear(in_features=256, out_features=256, bias=True)

)

(1): PixelNorm()

)

(blur): Blur()

(padding): ReflectionPad2d((1, 1, 1, 1))

)

(pxl_norm0): PixelNorm()

(actvn0): LeakyReLU(negative_slope=0.2, inplace=True)

(conv1): ModulatedConv2d(

(mlp_class_std): Sequential(

(0): EqualLinear(

(linear): Linear(in_features=256, out_features=256, bias=True)

)

(1): PixelNorm()

)

(blur): Blur()

(padding): ReflectionPad2d((1, 1, 1, 1))

)

(pxl_norm1): PixelNorm()

(actvn1): LeakyReLU(negative_slope=0.2, inplace=True)

)

(StyledConvBlock_1): StyledConvBlock(

(conv0): ModulatedConv2d(

(mlp_class_std): Sequential(

(0): EqualLinear(

(linear): Linear(in_features=256, out_features=256, bias=True)

)

(1): PixelNorm()

)

(blur): Blur()

(padding): ReflectionPad2d((1, 1, 1, 1))

)

(pxl_norm0): PixelNorm()

(actvn0): LeakyReLU(negative_slope=0.2, inplace=True)

(conv1): ModulatedConv2d(

(mlp_class_std): Sequential(

(0): EqualLinear(

(linear): Linear(in_features=256, out_features=256, bias=True)

)

(1): PixelNorm()

)

(blur): Blur()

(padding): ReflectionPad2d((1, 1, 1, 1))

)

(pxl_norm1): PixelNorm()

(actvn1): LeakyReLU(negative_slope=0.2, inplace=True)

)

(StyledConvBlock_2): StyledConvBlock(

(conv0): ModulatedConv2d(

(mlp_class_std): Sequential(

(0): EqualLinear(

(linear): Linear(in_features=256, out_features=256, bias=True)

)

(1): PixelNorm()

)

(blur): Blur()

(padding): ReflectionPad2d((1, 1, 1, 1))

)

(pxl_norm0): PixelNorm()

(actvn0): LeakyReLU(negative_slope=0.2, inplace=True)

(conv1): ModulatedConv2d(

(mlp_class_std): Sequential(

(0): EqualLinear(

(linear): Linear(in_features=256, out_features=256, bias=True)

)

(1): PixelNorm()

)

(blur): Blur()

(padding): ReflectionPad2d((1, 1, 1, 1))

)

(pxl_norm1): PixelNorm()

(actvn1): LeakyReLU(negative_slope=0.2, inplace=True)

)

(StyledConvBlock_3): StyledConvBlock(

(conv0): ModulatedConv2d(

(mlp_class_std): Sequential(

(0): EqualLinear(

(linear): Linear(in_features=256, out_features=256, bias=True)

)

(1): PixelNorm()

)

(blur): Blur()

(padding): ReflectionPad2d((1, 1, 1, 1))

)

(pxl_norm0): PixelNorm()

(actvn0): LeakyReLU(negative_slope=0.2, inplace=True)

(conv1): ModulatedConv2d(

(mlp_class_std): Sequential(

(0): EqualLinear(

(linear): Linear(in_features=256, out_features=256, bias=True)

)

(1): PixelNorm()

)

(blur): Blur()

(padding): ReflectionPad2d((1, 1, 1, 1))

)

(pxl_norm1): PixelNorm()

(actvn1): LeakyReLU(negative_slope=0.2, inplace=True)

)

(StyledConvBlock_up0): StyledConvBlock(

(conv0): ModulatedConv2d(

(mlp_class_std): Sequential(

(0): EqualLinear(

(linear): Linear(in_features=256, out_features=256, bias=True)

)

(1): PixelNorm()

)

(blur): Blur()

(padding): ReflectionPad2d((1, 1, 1, 1))

(upsampler): Upsample(scale_factor=2.0, mode='nearest')

)

(pxl_norm0): PixelNorm()

(actvn0): LeakyReLU(negative_slope=0.2, inplace=True)

(conv1): ModulatedConv2d(

(mlp_class_std): Sequential(

(0): EqualLinear(

(linear): Linear(in_features=256, out_features=128, bias=True)

)

(1): PixelNorm()

)

(blur): Blur()

(padding): ReflectionPad2d((1, 1, 1, 1))

)

(pxl_norm1): PixelNorm()

(actvn1): LeakyReLU(negative_slope=0.2, inplace=True)

)

(StyledConvBlock_up1): StyledConvBlock(

(conv0): ModulatedConv2d(

(mlp_class_std): Sequential(

(0): EqualLinear(

(linear): Linear(in_features=256, out_features=128, bias=True)

)

(1): PixelNorm()

)

(blur): Blur()

(padding): ReflectionPad2d((1, 1, 1, 1))

(upsampler): Upsample(scale_factor=2.0, mode='nearest')

)

(pxl_norm0): PixelNorm()

(actvn0): LeakyReLU(negative_slope=0.2, inplace=True)

(conv1): ModulatedConv2d(

(mlp_class_std): Sequential(

(0): EqualLinear(

(linear): Linear(in_features=256, out_features=64, bias=True)

)

(1): PixelNorm()

)

(blur): Blur()

(padding): ReflectionPad2d((1, 1, 1, 1))

)

(pxl_norm1): PixelNorm()

(actvn1): LeakyReLU(negative_slope=0.2, inplace=True)

)

(conv_img): Sequential(

(0): EqualConv2d(

(conv): Conv2d(64, 3, kernel_size=(1, 1), stride=(1, 1))

)

(1): Tanh()

)

(mlp): MLP(

(model): Sequential(

(0): PixelNorm()

(1): EqualLinear(

(linear): Linear(in_features=300, out_features=256, bias=True)

)

(2): LeakyReLU(negative_slope=0.2, inplace=True)

(3): PixelNorm()

(4): EqualLinear(

(linear): Linear(in_features=256, out_features=256, bias=True)

)

(5): LeakyReLU(negative_slope=0.2, inplace=True)

(6): PixelNorm()

(7): EqualLinear(

(linear): Linear(in_features=256, out_features=256, bias=True)

)

(8): LeakyReLU(negative_slope=0.2, inplace=True)

(9): PixelNorm()

(10): EqualLinear(

(linear): Linear(in_features=256, out_features=256, bias=True)

)

(11): LeakyReLU(negative_slope=0.2, inplace=True)

(12): PixelNorm()

(13): EqualLinear(

(linear): Linear(in_features=256, out_features=256, bias=True)

)

(14): LeakyReLU(negative_slope=0.2, inplace=True)

(15): PixelNorm()

(16): EqualLinear(

(linear): Linear(in_features=256, out_features=256, bias=True)

)

(17): LeakyReLU(negative_slope=0.2, inplace=True)

(18): PixelNorm()

(19): EqualLinear(

(linear): Linear(in_features=256, out_features=256, bias=True)

)

(20): LeakyReLU(negative_slope=0.2, inplace=True)

(21): PixelNorm()

(22): EqualLinear(

(linear): Linear(in_features=256, out_features=256, bias=True)

)

(23): PixelNorm()

)

)

(s_transform): ModulatedConv2d(

(mlp_class_std): Sequential(

(0): EqualLinear(

(linear): Linear(in_features=256, out_features=256, bias=True)

)

(1): PixelNorm()

)

(blur): Blur()

(padding): ReflectionPad2d((1, 1, 1, 1))

)

(t_transform): Modulated_1D(

(mlp_class_std): Sequential(

(0): EqualLinear(

(linear): Linear(in_features=256, out_features=256, bias=True)

)

(1): PixelNorm()

)

)

(t_denorm): Linear(in_features=256, out_features=2, bias=True)

)

)

./checkpoints/males_model/latest_net_g_running.pth

/content/drive/MyDrive/try/DLFS/models_distan/LATS_model.py:621: UserWarning: The torch.cuda.*DtypeTensor constructors are no longer recommended. It's best to use methods such as torch.tensor(data, dtype=*, device='cuda') to create tensors. (Triggered internally at ../torch/csrc/tensor/python_tensor.cpp:83.)

self.fake_B = self.Tensor(self.numClasses, sz[0], sz[1], sz[2], sz[3])

ffmpeg version 4.4.2-0ubuntu0.22.04.1 Copyright (c) 2000-2021 the FFmpeg developers

built with gcc 11 (Ubuntu 11.2.0-19ubuntu1)

configuration: --prefix=/usr --extra-version=0ubuntu0.22.04.1 --toolchain=hardened --libdir=/usr/lib/x86_64-linux-gnu --incdir=/usr/include/x86_64-linux-gnu --arch=amd64 --enable-gpl --disable-stripping --enable-gnutls --enable-ladspa --enable-libaom --enable-libass --enable-libbluray --enable-libbs2b --enable-libcaca --enable-libcdio --enable-libcodec2 --enable-libdav1d --enable-libflite --enable-libfontconfig --enable-libfreetype --enable-libfribidi --enable-libgme --enable-libgsm --enable-libjack --enable-libmp3lame --enable-libmysofa --enable-libopenjpeg --enable-libopenmpt --enable-libopus --enable-libpulse --enable-librabbitmq --enable-librubberband --enable-libshine --enable-libsnappy --enable-libsoxr --enable-libspeex --enable-libsrt --enable-libssh --enable-libtheora --enable-libtwolame --enable-libvidstab --enable-libvorbis --enable-libvpx --enable-libwebp --enable-libx265 --enable-libxml2 --enable-libxvid --enable-libzimg --enable-libzmq --enable-libzvbi --enable-lv2 --enable-omx --enable-openal --enable-opencl --enable-opengl --enable-sdl2 --enable-pocketsphinx --enable-librsvg --enable-libmfx --enable-libdc1394 --enable-libdrm --enable-libiec61883 --enable-chromaprint --enable-frei0r --enable-libx264 --enable-shared

libavutil 56. 70.100 / 56. 70.100

libavcodec 58.134.100 / 58.134.100

libavformat 58. 76.100 / 58. 76.100

libavdevice 58. 13.100 / 58. 13.100

libavfilter 7.110.100 / 7.110.100

libswscale 5. 9.100 / 5. 9.100

libswresample 3. 9.100 / 3. 9.100

libpostproc 55. 9.100 / 55. 9.100

Input #0, mov,mp4,m4a,3gp,3g2,mj2, from './results/out.mp4':

Metadata:

major_brand : isom

minor_version : 512

compatible_brands: isomiso2mp41

encoder : Lavf59.27.100

Duration: 00:00:05.05, start: 0.000000, bitrate: 293 kb/s

Stream #0:0(und): Video: mpeg4 (Simple Profile) (mp4v / 0x7634706D), yuv420p, 256x256 [SAR 1:1 DAR 1:1], 291 kb/s, 20 fps, 20 tbr, 10240 tbn, 20 tbc (default)

Metadata:

handler_name : VideoHandler

vendor_id : [0][0][0][0]

Stream mapping:

Stream #0:0 -> #0:0 (mpeg4 (native) -> h264 (libx264))

Press [q] to stop, [?] for help

[libx264 @ 0x5c312a18bb80] using SAR=1/1

[libx264 @ 0x5c312a18bb80] using cpu capabilities: MMX2 SSE2Fast SSSE3 SSE4.2 AVX FMA3 BMI2 AVX2

[libx264 @ 0x5c312a18bb80] profile High, level 1.2, 4:2:0, 8-bit

[libx264 @ 0x5c312a18bb80] 264 - core 163 r3060 5db6aa6 - H.264/MPEG-4 AVC codec - Copyleft 2003-2021 - http://www.videolan.org/x264.html - options: cabac=1 ref=3 deblock=1:0:0 analyse=0x3:0x113 me=hex subme=7 psy=1 psy_rd=1.00:0.00 mixed_ref=1 me_range=16 chroma_me=1 trellis=1 8x8dct=1 cqm=0 deadzone=21,11 fast_pskip=1 chroma_qp_offset=-2 threads=3 lookahead_threads=1 sliced_threads=0 nr=0 decimate=1 interlaced=0 bluray_compat=0 constrained_intra=0 bframes=3 b_pyramid=2 b_adapt=1 b_bias=0 direct=1 weightb=1 open_gop=0 weightp=2 keyint=250 keyint_min=20 scenecut=40 intra_refresh=0 rc_lookahead=40 rc=crf mbtree=1 crf=23.0 qcomp=0.60 qpmin=0 qpmax=69 qpstep=4 ip_ratio=1.40 aq=1:1.00

Output #0, mp4, to 'output.mp4':

Metadata:

major_brand : isom

minor_version : 512

compatible_brands: isomiso2mp41

encoder : Lavf58.76.100

Stream #0:0(und): Video: h264 (avc1 / 0x31637661), yuv420p(progressive), 256x256 [SAR 1:1 DAR 1:1], q=2-31, 20 fps, 10240 tbn (default)

Metadata:

handler_name : VideoHandler

vendor_id : [0][0][0][0]

encoder : Lavc58.134.100 libx264

Side data:

cpb: bitrate max/min/avg: 0/0/0 buffer size: 0 vbv_delay: N/A

frame= 101 fps=0.0 q=-1.0 Lsize= 99kB time=00:00:04.90 bitrate= 166.0kbits/s speed=7.41x

video:98kB audio:0kB subtitle:0kB other streams:0kB global headers:0kB muxing overhead: 1.307580%

[libx264 @ 0x5c312a18bb80] frame I:1 Avg QP:17.14 size: 5428

[libx264 @ 0x5c312a18bb80] frame P:99 Avg QP:23.06 size: 951

[libx264 @ 0x5c312a18bb80] frame B:1 Avg QP:13.00 size: 58

[libx264 @ 0x5c312a18bb80] consecutive B-frames: 98.0% 2.0% 0.0% 0.0%

[libx264 @ 0x5c312a18bb80] mb I I16..4: 47.3% 39.8% 12.9%

[libx264 @ 0x5c312a18bb80] mb P I16..4: 0.3% 1.1% 0.1% P16..4: 22.0% 9.5% 7.2% 0.0% 0.0% skip:59.9%

[libx264 @ 0x5c312a18bb80] mb B I16..4: 1.2% 0.0% 0.0% B16..8: 10.9% 0.0% 0.0% direct: 0.8% skip:87.1% L0:10.7% L1:89.3% BI: 0.0%

[libx264 @ 0x5c312a18bb80] 8x8 transform intra:60.4% inter:64.6%

[libx264 @ 0x5c312a18bb80] coded y,uvDC,uvAC intra: 35.5% 46.1% 19.5% inter: 19.1% 12.2% 0.7%

[libx264 @ 0x5c312a18bb80] i16 v,h,dc,p: 74% 14% 12% 0%

[libx264 @ 0x5c312a18bb80] i8 v,h,dc,ddl,ddr,vr,hd,vl,hu: 15% 8% 55% 2% 5% 3% 4% 5% 3%

[libx264 @ 0x5c312a18bb80] i4 v,h,dc,ddl,ddr,vr,hd,vl,hu: 24% 13% 20% 7% 12% 9% 7% 4% 5%

[libx264 @ 0x5c312a18bb80] i8c dc,h,v,p: 50% 15% 30% 5%

[libx264 @ 0x5c312a18bb80] Weighted P-Frames: Y:6.1% UV:0.0%

[libx264 @ 0x5c312a18bb80] ref P L0: 71.9% 22.7% 3.9% 1.4% 0.1%

[libx264 @ 0x5c312a18bb80] ref B L0: 66.7% 33.3%

[libx264 @ 0x5c312a18bb80] kb/s:157.86

convert complete.
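The ffmpeg log above shows the generated video being re-encoded from `mpeg4` to `h264 (libx264)`, presumably so that the browser-based player in the notebook can decode it. As a hedged alternative to passing one shell string, the same command can be built as an argument list, which avoids shell quoting issues with file names. The helper names below are hypothetical, and `-y` (overwrite the output without asking) is an addition that the original command does not use.

```python
import subprocess


def build_ffmpeg_cmd(src, dst):
    """Argument list equivalent to the ffmpeg call in dlfs_test.py, plus -y."""
    return [
        "ffmpeg", "-y",
        "-i", src,
        "-vcodec", "h264",
        "-pix_fmt", "yuv420p",
        dst,
    ]


def convert(src, dst):
    """Run the conversion; raises subprocess.CalledProcessError on failure."""
    subprocess.run(build_ffmpeg_cmd(src, dst), check=True)
```

Calling `convert('./results/out.mp4', 'output.mp4')` would reproduce the conversion step, with a non-zero ffmpeg exit status surfacing as an exception.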

### Displaying the results

# Play the mp4 video

from IPython.display import HTML

from base64 import b64encode

mp4 = open('./output.mp4', 'rb').read()

data_url = 'data:video/mp4;base64,' + b64encode(mp4).decode()

HTML(f"""<video width="50%" height="50%" controls><source src="{data_url}" type="video/mp4"></video>""")
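The playback cell embeds the whole video in the page as a base64 data URL. A small reusable version of that idea is sketched below; the function name `video_html` is hypothetical, and it only builds the HTML string so it can be used outside a notebook as well.

```python
from base64 import b64encode
from pathlib import Path


def video_html(path, width="50%"):
    """Return a <video> tag with the mp4 file embedded as a data URL."""
    data = Path(path).read_bytes()
    data_url = "data:video/mp4;base64," + b64encode(data).decode("ascii")
    return (
        f'<video width="{width}" controls>'
        f'<source src="{data_url}" type="video/mp4"></video>'
    )
```

In a notebook, `HTML(video_html('./output.mp4'))` with `from IPython.display import HTML` displays the player. Because the file is inlined, this approach suits short clips like the roughly 100 kB one produced here.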

| Command option | Default | Meaning |
|---|---|---|
| -h, --help | - | Show help |
| --input_dir | images | Input image directory |
| --input_img | 14.jpg | Input image file name |
| --model | males_model | females_model (female) / males_model (male) |

!python dlfs_test.py --input_img okegawa_m1.jpg

!python dlfs_test.py --input_img yaoi_m1.jpg

!python dlfs_test.py --input_img nitta_m2.jpg

!python dlfs_test.py --input_img izutsu_m2.jpg

・Result images (created in the "DLFS/results" folder)

# -*- coding: utf-8 -*-
##------------------------------------------
## DLFS test program Ver 0.01
## Disentangled Lifespan Face Synthesis (ICCV 2021)
## Simulate age-related changes in a human face with DLFS
## http://cedro3.com/ai/dlfs/
##
## 2023.11.02 Masahiro Izutsu
##------------------------------------------
## Official DLFS https://github.com/SenHe/DLFS#training-and-testing-link-to-the-pretrained-models-in-the-colab
##
## dlfs_test.py

# Imports and initial setup
import os
import argparse
import shutil
import subprocess
from collections import OrderedDict
from options.test_options import TestOptions
from data.data_loader import CreateDataLoader
from models_distan.models import create_model
import util.util as util
from util.visualizer import Visualizer

def convert_mp4(out_path):
    # Re-encode the generated video to H.264 and write it to ./output.mp4
    shutil.copy(out_path, './results/out.mp4')
    if os.path.exists('./output.mp4'):
        os.remove('./output.mp4')
    cmd = 'ffmpeg -i ./results/out.mp4 -vcodec h264 -pix_fmt yuv420p output.mp4'
    try:
        # check=True raises CalledProcessError when ffmpeg exits with an error
        subprocess.run(cmd, shell=True, check=True)
        print("convert complete.")
    except subprocess.CalledProcessError as e:
        print("convert error")
        print(e)

def main(args):
    opt = TestOptions().parse(save=False)
    opt.display_id = 0          # do not launch visdom
    opt.nThreads = 1            # test code only supports nThreads = 1
    opt.batchSize = 1           # test code only supports batchSize = 1
    opt.serial_batches = True   # no shuffle
    opt.no_flip = True          # no flip
    opt.in_the_wild = True      # triggers preprocessing of in-the-wild images in the dataloader
    opt.traverse = True         # tells the model to traverse the latent space between anchor classes
    opt.interp_step = 0.05      # controls the number of images interpolated between anchor classes

    data_loader = CreateDataLoader(opt)
    dataset = data_loader.load_data()
    visualizer = Visualizer(opt)

    # Create the face aging animation
    opt.name = args.model       # select 'females_model' or 'males_model'
    model = create_model(opt)
    model.eval()

    img_dir = args.input_dir    # input directory
    img_file = args.input_img   # input file name
    img_path = os.path.join(img_dir, img_file)

    data = dataset.dataset.get_item_from_path(img_path)
    visuals = model.inference(data)

    os.makedirs('results', exist_ok=True)
    # splitext handles extensions of any length (e.g. .jpeg), unlike img_file[:-4]
    out_path = os.path.join('results', os.path.splitext(img_file)[0] + '.mp4')
    visualizer.make_video(visuals, out_path)

    convert_mp4(out_path)       # codec conversion with ffmpeg (MP4V -> H264)

if __name__ == '__main__':
    parser = argparse.ArgumentParser()
    # test arguments
    parser.add_argument('--input_dir', type=str, default='images', help='Input image directory')
    parser.add_argument('--input_img', type=str, default='14.jpg', help='Input image file')
    parser.add_argument('--model', type=str, default='males_model', help='females_model / males_model')
    args = parser.parse_args()

    main(args)
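The ffmpeg log reports 101 frames at 20 fps (a 5.05 second clip). One reading that is consistent with those numbers, though not verified against the DLFS source, is that the model interpolates between adjacent age classes in steps of `interp_step`. With the six classes listed in `sort_order` and `interp_step = 0.05`, that gives 20 steps per adjacent pair plus the final frame. The function below is a sketch of this arithmetic only; its name and the formula are assumptions.

```python
def expected_frames(num_classes, interp_step):
    """Frame count if each adjacent class pair is split into 1/interp_step steps."""
    steps_per_pair = round(1 / interp_step)
    # (num_classes - 1) adjacent pairs, plus one frame for the last class
    return (num_classes - 1) * steps_per_pair + 1


frames = expected_frames(num_classes=6, interp_step=0.05)
duration_s = frames / 20  # 20 fps, as reported in the ffmpeg log
print(frames, duration_s)
```

Under the same assumption, a larger `interp_step` gives a shorter, coarser animation and a smaller one gives a longer, smoother one.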

!python dlfs_test.py --input_img 01.jpg --model females_model

!python dlfs_test.py --input_img 03.jpg --model females_model

!python dlfs_test.py --input_img 08.jpg --model females_model

!python dlfs_test.py --input_img f_tsuchiya_1.jpg --model females_model

・Result images (created in the "DLFS/results" folder)

!python dlfs_test.py --input_img 11.jpg

!python dlfs_test.py --input_img 12.jpg

!python dlfs_test.py --input_img 13.jpg

!python dlfs_test.py --input_img 14.jpg

・Result images (created in the "DLFS/results" folder)
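The per-image commands above differ only in the file name and the model, so they can be generated in a loop. The sketch below builds the argument lists and the per-image output path that `dlfs_test.py` writes (`results/<name>.mp4`). The helper names are hypothetical, and only the options documented in the table are used.

```python
import os


def build_commands(images, model="males_model", input_dir="images",
                   script="dlfs_test.py"):
    """Return one argument list per image for running dlfs_test.py."""
    return [
        ["python", script,
         "--input_dir", input_dir,
         "--input_img", img,
         "--model", model]
        for img in images
    ]


def result_path(img, results_dir="results"):
    """Path of the video that dlfs_test.py writes for a given input image."""
    stem = os.path.splitext(img)[0]
    return os.path.join(results_dir, stem + ".mp4")


commands = build_commands(["01.jpg", "03.jpg", "08.jpg"], model="females_model")
```

Each list can be passed to `subprocess.run(cmd, check=True)` from the `DLFS` directory. Note that `output.mp4` and `results/out.mp4` are overwritten on every run, so the per-image file under `results/` is the one to keep.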
